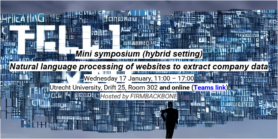

Symposium 2024 – Natural language processing of websites to extract company data

Held on 17 January 2024 at Utrecht University and online.

Background:

Data scraped from company websites are increasingly used for statistical analyses, in particular through natural language processing (NLP). For instance, the development of FIRMBACKBONE (https://firmbackbone.nl/) will make scraped data from the websites of Dutch companies available.

Aim:

The aim of this mini-symposium is to address and discuss core questions about using NLP for firm data. The end product is a paper, written collectively by the participants in preparation for Organizational Research Methods (ORM), that is intended to fit this call for papers. The core questions are:

- Can we create reliable firm data using NLP methods for research and official statistics?

- What are best practices to achieve this?

- What are caveats in creating datasets?

- How are other researchers dealing with these challenges?

Presentations:

- From company websites to business research: Beyond words by Josep Domènech – Universitat Politècnica de València

- Abstract:

In the rapidly evolving landscape of business research, company websites are an often underappreciated source of rich information. This presentation gives a comprehensive overview of methods for extracting and analyzing both textual and non-textual information from these websites. The approach begins with the construction of the sample to be studied, which raises two critical challenges: the relationship between companies and websites is not one-to-one, and directories of company websites often suffer from quality and completeness issues.

Once the list of company homepages is defined, the crawling process begins. Some common crawling issues are technical, such as expired domains and JavaScript-intensive sites; others relate to defining what constitutes a website and its domains, or to ethical considerations.

Crawled content can be analyzed from different perspectives to learn about the company. Text can be analyzed with simple natural language processing (NLP) techniques, such as Bag-of-Words, or with more sophisticated methods involving embeddings or encoders. The analysis also extends beyond text: the underlying website code is itself a valuable source of information, revealing the technological choices and preferences manifested in the company’s external digital interface.

Furthermore, NLP techniques facilitate a dynamic analysis of company websites. While static content provides a snapshot of company attributes, tracking changes in a website’s content over time can offer a deeper understanding of the company’s evolution and behavioral dynamics. This longitudinal approach shows how companies adapt their online presence in response to varying internal and external factors.
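The Bag-of-Words representation and the longitudinal view described above can be combined in a very small sketch, offered as an illustration rather than as the presenter's method. Two hypothetical snapshots of a company homepage are turned into word-count vectors, and one minus their cosine similarity serves as a crude score of how much the text changed. The tokenizer, the helper names (`bag_of_words`, `cosine_similarity`, `change_score`) and the example texts are all assumptions.

```python
import math
import re
from collections import Counter


def bag_of_words(text: str) -> Counter:
    """Lowercase the text and count alphabetic tokens (accented letters included)."""
    tokens = re.findall(r"[^\W\d_]+", text.lower())
    return Counter(tokens)


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity of two sparse count vectors; 0.0 if either vector is empty."""
    # Counter returns 0 for missing keys without inserting them
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def change_score(old_text: str, new_text: str) -> float:
    """Hypothetical change measure: ~0.0 = same word distribution, 1.0 = no shared words."""
    return 1.0 - cosine_similarity(bag_of_words(old_text), bag_of_words(new_text))


if __name__ == "__main__":
    # Illustrative snapshots of the same (fictional) homepage at two points in time
    snapshot_2022 = "We sell bicycles and repair bicycles in Utrecht."
    snapshot_2024 = "We sell e-bikes and bicycles and offer online repair booking."
    print(f"change score: {change_score(snapshot_2022, snapshot_2024):.2f}")
```

A score near 0 means the word distribution barely changed between snapshots, while 1 means they share no words. In practice one would at least strip navigation boilerplate and stop words first, and embedding-based comparisons would pick up changes in meaning that raw word counts miss.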


- A look at the textual analysis pipeline for company data: from the sample extraction to the model calibration by Paolo Mustica – Università degli Studi di Messina

- Abstract: The analysis of unstructured text has gained importance in economics in recent years. Institutions and researchers are increasingly interested in non-financial data: for example, since 2017 the Non-Financial Reporting Directive has provided a uniform regulatory framework for the non-financial information of large listed companies in the European Union. Because these data are unstructured, it is important to standardize the procedures for extracting information from text. This presentation focuses on the non-financial reports of companies included in the Italian market index (FTSE MIB) and discusses the steps of the textual analysis pipeline, from extracting the sample on which the classification model is tested to calibrating the model.

- Exploring Corporate Digital Footprints: NLP Insights from WebSweep’s High-Speed Web Scraping by Javier Garcia Bernardo and Peter Gerbrands – Utrecht University

- Abstract: FIRMBACKBONE’s effort to provide longitudinal information on Dutch companies for scientific research and education includes scraping corporate websites. For this purpose we have developed WebSweep, a state-of-the-art library for scraping (corporate) websites. It enables large-scale scraping and pre-processes the retrieved data to limit storage requirements and to support FIRMBACKBONE’s corporate identification strategy. We present comprehensive information on WebSweep’s performance, in terms of both speed and quality of results, by linking the scraped data to diverse third-party sources. Finally, we highlight some potential applications and present preliminary results.

- Company-level indicators from online data sources by Patrick Breithaupt – Leibniz Centre for European Economic Research (presentation not available)

- Abstract: Today, a large share of companies has a publicly accessible website that may contain valuable information. Since 2018, this data source has been exploited through the Mannheim Web Panel (MWP). I provide an overview of the MWP, which contains data on around one million companies in Germany. The scraped data are linked to the Mannheim Enterprise Panel (MUP), which covers all economically active companies in Germany and provides company-level information such as the number of employees and location. However, website data form only part of our company-level data sets from the internet: other sources include online platforms such as Facebook, Kununu and XING. Here, I again rely on web scraping, but also on cooperation with established companies. As examples of company-level data from online sources, I give a brief overview of indicators based on natural language processing and machine learning methods. These include economic indicators such as companies’ innovative capacity, expenditure on on-the-job training and the company-level degree of digitalisation. Drawing on experience in this field, I shed light on the prerequisites, hurdles, best practices and limitations associated with collecting and processing web data and with creating web-based company indicators.
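Several of these talks link scraped website data to other firm-level sources (WebSweep’s validation against third-party sources, the MWP’s link to the MUP), and the first talk stresses that companies and websites do not map one-to-one. A minimal sketch of one common first step, joining records on a normalized host name, is shown below. It is an illustration, not the method used by any of the presenters: the register and scraped records, their field names (`firm_id`, `website`, `url`), the helper names and the rule of stripping a leading `www.` are all hypothetical.

```python
from collections import defaultdict
from urllib.parse import urlsplit


def normalize_host(url: str) -> str:
    """Reduce a URL to a lowercase host name without a leading 'www.'.

    Assumption: the host name is a good-enough join key. A real pipeline would
    map it to the registrable domain using a public-suffix list.
    """
    if "://" not in url:
        url = "http://" + url  # without '//', urlsplit treats the whole string as a path
    host = (urlsplit(url).hostname or "").lower()
    return host[4:] if host.startswith("www.") else host


def link_by_domain(register: list[dict], scraped: list[dict]) -> dict[str, list[str]]:
    """Map each firm ID in a (hypothetical) register to the scraped URLs on its host.

    The mapping is not one-to-one: several firms may list the same website,
    and a listed website may have yielded no scraped pages at all.
    """
    urls_by_host: dict[str, list[str]] = defaultdict(list)
    for page in scraped:
        urls_by_host[normalize_host(page["url"])].append(page["url"])
    # dict.get does not trigger defaultdict's factory, so unmatched firms get []
    return {
        firm["firm_id"]: urls_by_host.get(normalize_host(firm["website"]), [])
        for firm in register
    }


if __name__ == "__main__":
    register = [
        {"firm_id": "NL001", "website": "https://www.bakkerij-jansen.nl"},
        {"firm_id": "NL002", "website": "fietsenmaker-utrecht.nl"},
    ]
    scraped = [
        {"url": "http://bakkerij-jansen.nl/contact"},
        {"url": "https://WWW.Bakkerij-Jansen.nl/over-ons"},
    ]
    for firm_id, urls in link_by_domain(register, scraped).items():
        print(firm_id, urls)
```

Joining on the host name is cheap and transparent, but it is only a starting point: redirects, shared hosting platforms and firms with several domains typically call for additional matching on names or addresses.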